The robots.txt file, or what a bot can do on your website

As we mentioned in a previous blog post, each search engine on the web has its own robots, or bots, constantly crawling sites. These bots are not limited to Google, Bing, and Yahoo. Any social media application (think Facebook or Pinterest) has its own crawling bots, as do aggregators such as MSN or CNN. How do bots find your website?

The robots.txt File

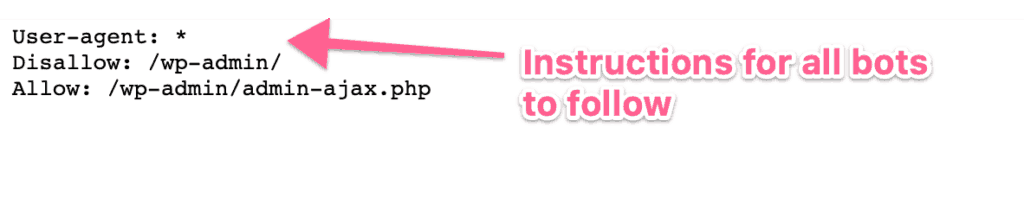

Bots look for a file called robots.txt. This file tells bots which content on your site they may crawl and index. When a bot comes to your site, the first thing it does is look for robots.txt and follow its instructions. If no robots.txt file exists, or the file contains no exclusions for that bot, the bot will crawl all pages of your site, because the default is to allow crawling.

Robots.txt can allow or disallow bots from crawling certain pages on your site. It also lets you set rules for individual bots, called user-agents, and even specify how long a bot should wait between page crawls, although not every bot honors that delay. Pages you might want to exclude include test or staging sections, internal data that is not for public consumption, or a substantial number of images or .pdf documents that you don’t need indexed. But beware, this is not the place to hide sensitive user information. There are other ways to do that.
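To make this concrete, here is a minimal sketch that parses a small robots.txt with Python's standard `urllib.robotparser` module and asks what different bots may crawl. The rules, the paths, the bot names (`SomeBot`, `ExampleBot`) and the `example.com` URLs are all made up for illustration; your own file will look different.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only; the paths and bot names are assumptions.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /staging/
Disallow: /internal/
Crawl-delay: 10

User-agent: ExampleBot
Disallow: /
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a parser object that can answer questions."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(SAMPLE_ROBOTS_TXT)

# A bot with no section of its own falls under the "*" rules.
print(rules.can_fetch("SomeBot", "https://www.example.com/blog/post"))     # True
print(rules.can_fetch("SomeBot", "https://www.example.com/staging/new"))   # False

# ExampleBot has its own section that disallows the whole site.
print(rules.can_fetch("ExampleBot", "https://www.example.com/blog/post"))  # False

# The wait (in seconds) requested between page crawls for bots under "*".
print(rules.crawl_delay("SomeBot"))                                        # 10
```

Keep in mind that this only shows how one parser reads the file. Each real bot decides for itself how to interpret the rules, and whether to follow them at all.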

A few other useful items to know about robots.txt: it must go in your site’s top-level directory, and its name is case-sensitive. The name is “robots.txt”, not Robots.txt or robot.txt or ROBOTS.txt. Every subdomain may have its own unique robots.txt file. Keep in mind that some malware bots or email address scrapers may choose to ignore your robots.txt file, so it is not foolproof.

Test It

How do you know if bots can find your website? You can check by entering your domain name followed by /robots.txt in your browser’s address bar. If the file exists, you’ll see a plain text file listing the site’s crawl rules.

And be aware that anyone can see your robots.txt file – try it! Just add /robots.txt to the end of any domain to see what a site wants crawled.
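If you would rather do this from a script, the sketch below builds the robots.txt address for whatever page you give it. Because the file lives in the top-level directory of each domain or subdomain, the path of the page is simply replaced with /robots.txt. The function names and the `example.com` addresses are assumptions made for this example.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt address for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host (subdomain included); swap the path for /robots.txt.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_robots_txt(page_url: str, timeout: float = 10.0) -> str:
    """Download the robots.txt text for a site (needs network access)."""
    with urlopen(robots_txt_url(page_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


print(robots_txt_url("https://www.example.com/blog/some-post?ref=home"))
# https://www.example.com/robots.txt
print(robots_txt_url("https://shop.example.com/cart"))
# https://shop.example.com/robots.txt
```

Note that the two addresses differ: a subdomain such as shop.example.com is checked separately from www.example.com. `fetch_robots_txt` is not called here because it goes out to the network, and it will raise an error if the site has no robots.txt file.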

If you would like to create a robots.txt file, this article from Google is a good reference. This tool lets you test whether you have set the file up correctly.

If you choose to use a robots.txt file, make sure that you have not inadvertently blocked Googlebot from crawling your site! And if you block a page, remember that bots will not follow the links on that page either. For those links to be crawled, they need to appear on a different, accessible page.
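One quick way to catch an accidental block is to run your rules through a parser and ask which bots are shut out of your home page. The sketch below does this with Python's standard `urllib.robotparser`. The two sample files, the helper name `blocked_bots` and the `example.com` address are assumptions for illustration, and Python's parser is not guaranteed to match Googlebot's own behavior in every case, so treat the result as a first check rather than the final word.

```python
from urllib.robotparser import RobotFileParser


def blocked_bots(robots_txt: str, url: str, bots: list[str]) -> list[str]:
    """Return the bots from the list that these rules keep away from url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [bot for bot in bots if not parser.can_fetch(bot, url)]


# Hypothetical file that blocks every bot from the whole site.
RISKY = """\
User-agent: *
Disallow: /
"""

# Hypothetical file that only keeps bots out of a staging section.
SAFER = """\
User-agent: *
Disallow: /staging/
"""

bots_to_check = ["Googlebot", "Bingbot"]
home_page = "https://www.example.com/"

print(blocked_bots(RISKY, home_page, bots_to_check))  # ['Googlebot', 'Bingbot']
print(blocked_bots(SAFER, home_page, bots_to_check))  # []
```

An empty list for your home page and other important pages is what you are hoping to see.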

There are many more parameters for the robots.txt file. To learn more about the technical aspects of robots.txt, head to this page.